Practical Applications

Broadcasting comes up frequently in real-world problems. This page gives a few examples.

1 Sweep

Anytime you would normally use sweep(), you can now use broadcasting, which is faster and more memory-efficient.

Consider for example the following matrix:

mat <- matrix(rnorm(20), nrow = 5)

bcr(mat) <- TRUE # `bcr` is short-hand for `broadcaster`

print(mat)

#> [,1] [,2] [,3] [,4]

#> [1,] -0.6264538 -0.8204684 1.5117812 -0.04493361

#> [2,] 0.1836433 0.4874291 0.3898432 -0.01619026

#> [3,] -0.8356286 0.7383247 -0.6212406 0.94383621

#> [4,] 1.5952808 0.5757814 -2.2146999 0.82122120

#> [5,] 0.3295078 -0.3053884 1.1249309 0.59390132

#> broadcaster

We want to scale each column of this matrix, by subtracting its mean and dividing by its standard deviation.

This can be done using sweep() like so:

scaled <- sweep(mat, 2, colMeans(mat), FUN = "-")

scaled <- sweep(scaled, 2, matrixStats::colSds(mat), FUN = "/")

print(scaled)

#> [,1] [,2] [,3] [,4]

#> [1,] -0.78636077 -1.4287607 0.9831766 -1.0853730

#> [2,] 0.05657773 0.5267275 0.2346563 -1.0235351

#> [3,] -1.00401553 0.9018514 -0.4399058 1.0418477

#> [4,] 1.52544305 0.6588265 -1.5030097 0.7780561

#> [5,] 0.20835553 -0.6586446 0.7250827 0.2890044

#> broadcaster

But it can be done much faster and more memory-efficiently with broadcasting like so:

means <- matrix(colMeans(mat), nrow = 1L)

sds <- matrix(matrixStats::colSds(mat), nrow = 1L)

bcr(means) <- bcr(sds) <- TRUE

scaled <- (mat - means) / sds

print(scaled)

#> [,1] [,2] [,3] [,4]

#> [1,] -0.78636077 -1.4287607 0.9831766 -1.0853730

#> [2,] 0.05657773 0.5267275 0.2346563 -1.0235351

#> [3,] -1.00401553 0.9018514 -0.4399058 1.0418477

#> [4,] 1.52544305 0.6588265 -1.5030097 0.7780561

#> [5,] 0.20835553 -0.6586446 0.7250827 0.2890044

#> broadcaster

The larger the matrix mat becomes, the more advantageous it becomes to use broadcasting rather than sweeping.
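To see what broadcasting avoids, the following base R sketch performs the same scaling by explicitly expanding the column statistics into full-size matrices, and checks the result against base R's scale(). It uses only base R (apply(mat, 2, sd) stands in for matrixStats::colSds()), and the seed is an assumption made so the block is reproducible. The names means_full and sds_full are illustrative.

```r
# Base R only; `mat` is regenerated here so the block is self-contained.
set.seed(1)
mat <- matrix(rnorm(20), nrow = 5)

col_means <- colMeans(mat)
col_sds   <- apply(mat, 2, sd)  # base R stand-in for matrixStats::colSds()

# Explicit expansion: each statistic is copied into a full 5 x 4 matrix.
# These full-size copies are the memory cost that broadcasting avoids.
means_full <- matrix(col_means, nrow(mat), ncol(mat), byrow = TRUE)
sds_full   <- matrix(col_sds,   nrow(mat), ncol(mat), byrow = TRUE)
scaled_expanded <- (mat - means_full) / sds_full

# Reference result: scale() centers each column and divides by its sd.
scaled_reference <- scale(mat)

# scale() attaches extra attributes, so compare values only.
all.equal(scaled_expanded, scaled_reference, check.attributes = FALSE)
```

With a 5 x 4 matrix the two temporary copies are negligible, but they grow with the size of mat, which is why the broadcasted version scales better.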

2 Bind arrays

The abind() function, from the package of the same name, allows one to bind arrays along any arbitrary dimension (not just along rows or columns).

Unfortunately, abind() does not support broadcasting, which can lead to frustrations such as the following:

(x <- array(1:27, c(3,3,3)))

(y <- array(1L, c(3,3,1)))

abind::abind(x, y, along = 2)

#> Error in abind(x, y, along = 2) :

#> arg 'X2' has dims=3, 3, 1; but need dims=3, X, 3

Here, abind() is complaining about the dimensions not fitting perfectly.

But intuitively, binding x and y should be possible, with dimension 3 from array y being broadcasted to size 3.

The bind_array() function provided by the ‘broadcast’ package can bind the arrays without problems:

x <- array(1:27, c(3,3,3))

y <- array(1L, c(3,3,1))

bind_array(list(x, y), 2)

#> , , 1

#>

#> [,1] [,2] [,3] [,4] [,5] [,6]

#> [1,] 1 4 7 1 1 1

#> [2,] 2 5 8 1 1 1

#> [3,] 3 6 9 1 1 1

#>

#> , , 2

#>

#> [,1] [,2] [,3] [,4] [,5] [,6]

#> [1,] 10 13 16 1 1 1

#> [2,] 11 14 17 1 1 1

#> [3,] 12 15 18 1 1 1

#>

#> , , 3

#>

#> [,1] [,2] [,3] [,4] [,5] [,6]

#> [1,] 19 22 25 1 1 1

#> [2,] 20 23 26 1 1 1

#> [3,] 21 24 27 1 1 1

bind_array() is also considerably faster and more memory efficient than abind().
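For comparison, here is a base R sketch of what "broadcasting y along dimension 3 and then binding along dimension 2" amounts to when done by hand. It is not how bind_array() is implemented; it only reproduces the same result for this small example, and the names y_full and out are illustrative.

```r
x <- array(1:27, c(3, 3, 3))
y <- array(1L, c(3, 3, 1))

# Manually "broadcast" y along dimension 3 by repeating its single layer.
# Recycling via array() is only correct here because the broadcast
# dimension is the last one.
y_full <- array(y, c(3, 3, 3))

# Bind along dimension 2: allocate the result, then fill both column blocks.
out <- array(NA_integer_, c(3, 6, 3))
out[, 1:3, ] <- x
out[, 4:6, ] <- y_full

out[, , 1]
```

Note that this approach materializes the expanded copy y_full, which a broadcasted bind does not need.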

3 Compute on all possible pairs

Suppose you have 2 vectors of character strings, and you want to calculate the distance (i.e. dissimilarity) between all possible pairs of these strings.

In base R, this would require either a loop (which is slow), or repeating the vectors several times (which requires more memory), or use of the outer() function (which is both slow and requires lots of memory).

The broadcasted way to do this is to make the vectors orthogonal, and then calculate the distances between the strings of the orthogonal vectors - this is both fast and memory efficient.

For example:

x <- array(month.name, c(12, 1))

y <- array(month.abb, c(1, 12))

out <- bc.str(x, y, "levenshtein")

dimnames(out) <- list(month.name, month.abb)

print(out)

#> Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec

#> January 4 7 5 6 5 5 5 6 7 7 7 7

#> February 7 5 6 7 6 7 7 7 7 8 8 7

#> March 4 5 2 4 3 5 5 5 5 4 5 4

#> April 5 5 4 2 5 5 4 4 5 5 5 5

#> May 2 3 1 3 0 3 3 3 3 3 3 3

#> June 2 4 4 4 4 1 2 3 4 4 4 4

#> July 3 4 4 4 3 2 1 3 4 4 4 4

#> August 6 6 6 5 6 5 5 3 6 5 6 6

#> September 9 7 8 7 9 9 9 9 6 8 9 8

#> October 7 6 6 6 7 7 7 7 6 4 6 6

#> November 8 6 7 7 8 8 8 8 7 8 5 7

#> December 8 6 7 7 8 8 8 8 7 7 8 5
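For this particular distance, the result can be cross-checked with utils::adist(), which ships with R and computes the generalized Levenshtein distance between all pairs of two character vectors (with its default unit costs this is the plain Levenshtein distance). This is only a check for the string-distance case; it does not generalize to arbitrary pairwise computations the way the orthogonal-vector approach does.

```r
# Pairwise Levenshtein distances between full and abbreviated month names.
ref <- utils::adist(month.name, month.abb)
dimnames(ref) <- list(month.name, month.abb)

# A few entries that are easy to verify by hand:
# "May" vs "May" needs 0 edits, "March" vs "Mar" needs 2 deletions.
ref[c("March", "May", "June"), c("Mar", "May", "Jun")]
```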

4 Modify Array Along a Dimension

4.1 Along columns

Consider the following array:

x <- array(sample(0:9), c(3,3,3))

print(x)

#> , , 1

#>

#> [,1] [,2] [,3]

#> [1,] 9 3 1

#> [2,] 5 7 0

#> [3,] 6 8 2

#>

#> , , 2

#>

#> [,1] [,2] [,3]

#> [1,] 4 6 8

#> [2,] 9 3 1

#> [3,] 5 7 0

#>

#> , , 3

#>

#> [,1] [,2] [,3]

#> [1,] 2 5 7

#> [2,] 4 6 8

#> [3,] 9 3 1

Suppose one wishes to add 10 to all values of the second column, and 100 to all values of the third column.

This can be done with ‘broadcast’, by creating a modification vector whose dimensional direction is aligned with - in this case - the columns, and performing broadcasted addition between the array and said vector.

We don’t want to change the first column, so we add 0 to the first column.

So we can do this as follows:

v <- array(c(0, 10, 100), c(1, 3))

print(v)

#> [,1] [,2] [,3]

#> [1,] 0 10 100

bcr(x) <- bcr(v) <- TRUE

x + v

#> , , 1

#>

#> [,1] [,2] [,3]

#> [1,] 9 13 101

#> [2,] 5 17 100

#> [3,] 6 18 102

#>

#> , , 2

#>

#> [,1] [,2] [,3]

#> [1,] 4 16 108

#> [2,] 9 13 101

#> [3,] 5 17 100

#>

#> , , 3

#>

#> [,1] [,2] [,3]

#> [1,] 2 15 107

#> [2,] 4 16 108

#> [3,] 9 13 101

#>

#> broadcaster

4.2 Along rows

Suppose that, instead of adding {0, 10, 100} to the 3 columns, we wish to add these to the rows instead.

This can again be done by creating a modification vector - this time aligned with the rows.

Note that in the ‘broadcast’ package, a vector without dimensions is interpreted as a vector aligned with rows (confusingly called a “column vector” in mathematics). Like so:

v <- c(0, 10, 100) # effectively the same as array(c(0, 10, 100), c(3, 1))

print(v)

#> [1] 0 10 100

bcr(x) <- bcr(v) <- TRUE

x + v

#> , , 1

#>

#> [,1] [,2] [,3]

#> [1,] 9 3 1

#> [2,] 15 17 10

#> [3,] 106 108 102

#>

#> , , 2

#>

#> [,1] [,2] [,3]

#> [1,] 4 6 8

#> [2,] 19 13 11

#> [3,] 105 107 100

#>

#> , , 3

#>

#> [,1] [,2] [,3]

#> [1,] 2 5 7

#> [2,] 14 16 18

#> [3,] 109 103 101

#>

#> broadcaster

4.3 Along layers

As a final example, we want to add {0, 10, 100} to the layers (3rd dimension) of x.

As you may have guessed, one can do so by creating a modification vector aligned with the layers, and adding that vector to the array, like so:

v <- array(c(0, 10, 100), c(1, 1, 3))

print(v)

#> , , 1

#>

#> [,1]

#> [1,] 0

#>

#> , , 2

#>

#> [,1]

#> [1,] 10

#>

#> , , 3

#>

#> [,1]

#> [1,] 100

bcr(x) <- bcr(v) <- TRUE

x + v

#> , , 1

#>

#> [,1] [,2] [,3]

#> [1,] 9 3 1

#> [2,] 5 7 0

#> [3,] 6 8 2

#>

#> , , 2

#>

#> [,1] [,2] [,3]

#> [1,] 14 16 18

#> [2,] 19 13 11

#> [3,] 15 17 10

#>

#> , , 3

#>

#> [,1] [,2] [,3]

#> [1,] 102 105 107

#> [2,] 104 106 108

#> [3,] 109 103 101

#>

#> broadcaster
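The three modifications in this section can be cross-checked in base R with sweep(), where the margin argument plays the role that the orientation of the modification vector plays in broadcasting. A minimal sketch, assuming a small deterministic array in place of the random x used above:

```r
x <- array(1:27, c(3, 3, 3))  # deterministic stand-in for the random array
v <- c(0, 10, 100)

by_col   <- sweep(x, 2, v, "+")  # v aligned with columns (dimension 2)
by_row   <- sweep(x, 1, v, "+")  # v aligned with rows (dimension 1)
by_layer <- sweep(x, 3, v, "+")  # v aligned with layers (dimension 3)

# Spot checks: the same element of v is added along the chosen dimension.
by_col[1, 2, 1] - x[1, 2, 1]    # 10: second column gets + 10
by_row[3, 1, 1] - x[3, 1, 1]    # 100: third row gets + 100
by_layer[1, 1, 2] - x[1, 1, 2]  # 10: second layer gets + 10
```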

5 Grouped Broadcasting

5.1 Casting with equal group sizes

We have a matrix of the points (ranging from 0 to 100) that 3 students have achieved for 2 homework exercises:

x <- cbind(

student = rep(1:3, each = 2),

homework = rep(1:2, 3),

points = sample(0:100, 6)

)

print(x)

#> student homework points

#> [1,] 1 1 39

#> [2,] 1 2 43

#> [3,] 2 1 24

#> [4,] 2 2 69

#> [5,] 3 1 38

#> [6,] 3 2 50

However, the teacher has realised that the second homework assignment was much more difficult than the first, and has thus decided that the second homework assignment should weigh more - 2 times more, to be precise.

Thus the points for homework assignment 2 should be multiplied by 2. There are various ways to do this. For the sake of demonstration, an approach using acast() is shown here, as this is an example of a grouped broadcasted operation.

‘broadcast’ allows users to cast subsets of an array onto a new dimension, based on some grouping factor - in this case the homework ID is the grouping factor, and the following will do the job:

margin <- 1L # we cast from the rows, so margin = 1

grp <- as.factor(x[, "homework"]) # factor to define which rows belongs to which group

levels(grp) <- c("assignment 1", "assignment 2") # names for the new dimension

out <- acast(x, margin, grp) # casting is performed here

bcr(out) <- TRUE

print(out)

#> , , assignment 1

#>

#> student homework points

#> [1,] 1 1 39

#> [2,] 2 1 24

#> [3,] 3 1 38

#>

#> , , assignment 2

#>

#> student homework points

#> [1,] 1 2 43

#> [2,] 2 2 69

#> [3,] 3 2 50

#>

#> broadcaster

Notice that the dimension-names of the new dimension (dimension 3) are equal to levels(grp).

With the group-cast array, one can use broadcasting to easily do things like multiply the values in each group with a different value.

In this case, we need to multiply the values for assignment 2 with 2, and leave the rest as-is.

Like so:

# create the multiplication factor array:

mult <- array(

1,

dim = c(1, 3, 2),

dimnames = list(

NULL,

c("mult_id", "mult_homework", "mult_points"),

c("assignment 1", "assignment 2")

)

)

mult[, "mult_points", c("assignment 1", "assignment 2")] <- c(1, 2)

bcr(mult) <- TRUE

print(mult)

#> , , assignment 1

#>

#> mult_id mult_homework mult_points

#> [1,] 1 1 1

#>

#> , , assignment 2

#>

#> mult_id mult_homework mult_points

#> [1,] 1 1 2

#>

#> broadcaster

# grouped broadcasted operation:

out2 <- out * mult

dimnames(out2) <- dimnames(out)

print(out2)

#> , , assignment 1

#>

#> student homework points

#> [1,] 1 1 39

#> [2,] 2 1 24

#> [3,] 3 1 38

#>

#> , , assignment 2

#>

#> student homework points

#> [1,] 1 2 86

#> [2,] 2 2 138

#> [3,] 3 2 100

#>

#> broadcaster

Now the array needs to be reverse-cast back to its original shape.

Reverse-casting an array can be done by combining asplit() with bind_array():

asplit(out2, ndim(out2)) |> bind_array(along = margin, name_along = FALSE)

#> student homework points

#> [1,] 1 1 39

#> [2,] 2 1 24

#> [3,] 3 1 38

#> [4,] 1 2 86

#> [5,] 2 2 138

#> [6,] 3 2 100

…though the order of, in this case, the rows (because margin = 1) will not necessarily be the same as the original array.
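As noted earlier, there are various ways to apply this weighting. One plain base R alternative, shown here only as a cross-check of the numbers above, looks up a per-assignment weight by indexing with the homework ID. It keeps the original row order, but it does not produce the grouped array that the casting approach gives you. The points are copied from the printed matrix above; the name weights is illustrative.

```r
# Same data as printed above, with the sampled points written out explicitly.
x <- cbind(
  student  = rep(1:3, each = 2),
  homework = rep(1:2, 3),
  points   = c(39, 43, 24, 69, 38, 50)
)

# One weight per homework assignment; indexing by the homework ID
# expands this to one weight per row.
weights <- c(1, 2)
x[, "points"] <- x[, "points"] * weights[x[, "homework"]]

print(x)
```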

5.2 Casting with unequal group sizes

Casting arrays also works when the groups have unequal sizes, though there are a few things to keep in mind.

Let’s start with a different input array:

x <- cbind(

id = c(rep(1:3, each = 2), 1),

grp = c(rep(1:2, 3), 2),

val = rnorm(7)

)

print(x)

#> id grp val

#> [1,] 1 1 -0.5428883

#> [2,] 1 2 -0.4333103

#> [3,] 2 1 -0.6494716

#> [4,] 2 2 0.7267507

#> [5,] 3 1 1.1519118

#> [6,] 3 2 0.9921604

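#> [7,] 1 2 -0.4295131

Once again, acast() can be used, but because the groups now have unequal sizes we need to specify fill = TRUE to allow acast() to fill the gaps; otherwise an error is raised.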

Thus one can cast in this case like so:

grp <- as.factor(x[, 2])

levels(grp) <- c("a", "b")

margin <- 1L

out <- acast(x, margin, grp, fill = TRUE)

print(out)

#> , , a

#>

#> id grp val

#> [1,] 1 1 -0.5428883

#> [2,] 2 1 -0.6494716

#> [3,] 3 1 1.1519118

#> [4,] NA NA NA

#>

#> , , b

#>

#> id grp val

#> [1,] 1 2 -0.4333103

#> [2,] 2 2 0.7267507

#> [3,] 3 2 0.9921604

#> [4,] 1 2 -0.4295131

Notice that some values are missing ( NA ); if some groups have an unequal number of elements, acast() needs to fill the gaps with missing values. By default, gaps are filled with NA if x is atomic, and with list(NULL) if x is recursive. The user can change the filling value through the fill_value argument.

Once again, we can get the original array back when we’re done like so:

asplit(out, ndim(out)) |> bind_array(along = margin)

#> id grp val

#> a.1 1 1 -0.5428883

#> a.2 2 1 -0.6494716

#> a.3 3 1 1.1519118

#> a.4 NA NA NA

#> b.1 1 2 -0.4333103

#> b.2 2 2 0.7267507

#> b.3 3 2 0.9921604

#> b.4 1 2 -0.4295131

… but we do keep the missing values when the groups have an unequal number of elements.
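To make the padding behaviour concrete, here is a hand-rolled base R sketch of the same idea: split the rows by group, place each group in its own layer, and pad the shorter group with NA. It is not how acast() is implemented, and the val column uses rounded illustrative values. It also shows one way to drop the all-NA padding rows after reverse-casting; out, stacked and restored are illustrative names.

```r
x <- cbind(
  id  = c(rep(1:3, each = 2), 1),
  grp = c(rep(1:2, 3), 2),
  val = c(-0.54, -0.43, -0.65, 0.73, 1.15, 0.99, -0.43)  # illustrative values
)
grp <- factor(x[, "grp"], labels = c("a", "b"))

# Allocate one layer per group, sized for the largest group, pre-filled with NA.
n_max <- max(table(grp))
out <- array(
  NA_real_,
  dim = c(n_max, ncol(x), nlevels(grp)),
  dimnames = list(NULL, colnames(x), levels(grp))
)

# Copy each group's rows into the top of its layer; the rest stays NA.
for (g in levels(grp)) {
  rows <- which(grp == g)
  out[seq_along(rows), , g] <- x[rows, , drop = FALSE]
}

# Reverse: stack the layers again, then drop rows that are entirely NA.
stacked  <- do.call(rbind, lapply(levels(grp), function(g) out[, , g]))
restored <- stacked[rowSums(!is.na(stacked)) > 0, , drop = FALSE]
```

Dropping rows this way assumes the original data contains no rows that are entirely NA; otherwise those rows would be removed too.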

6 Nested Binding with Broadcasting

Consider the following 2 lists x and y:

x <- list(

group1 = list(

class1 = list(

height = rnorm(10, 170),

weight = rnorm(10, 80),

sex = sample(c("M", "F", NA), 10, TRUE)

),

class2 = list(

height = rnorm(10, 170),

weight = rnorm(10, 80),

sex = sample(c("M", "F", NA), 10, TRUE)

)

),

group2 = list(

class1 = list(

height = rnorm(10, 170),

weight = rnorm(10, 80),

sex = sample(c("M", "F", NA), 10, TRUE)

),

class2 = list(

height = rnorm(10, 170),

weight = rnorm(10, 80),

sex = sample(c("M", "F", NA), 10, TRUE)

)

)

)

y <- list(

group1 = list(

class1 = list(

smoker = sample(c(TRUE, FALSE), 10, TRUE)

),

class2 = list(

smoker = sample(c(TRUE, FALSE), 10, TRUE)

)

),

group2 = list(

class1 = list(

smoker = sample(c(TRUE, FALSE), 10, TRUE)

),

class2 = list(

smoker = sample(c(TRUE, FALSE), 10, TRUE)

)

)

)

We wish to merge these 2 lists, such that after every list element “sex” from list x, the element “smoker” from list y follows.

This can be done with a double for-loop in base R, but if both lists are large, this becomes slow. With the ‘broadcast’ package, one can cast both lists as dimensional, and then use broadcasted binding to merge x and y together with ease. Moreover, if a list can be represented dimensionally, it is advised to make the list dimensional as that will make further analyses, manipulations, and sub-set operations much easier and faster compared to a hierarchical list.

So let’s first cast both lists from hierarchical lists to dimensional lists:

(x2 <- cast_hier2dim(x, direction.names = 1))

#> , , group1

#>

#> class1 class2

#> height numeric,10 numeric,10

#> weight numeric,10 numeric,10

#> sex character,10 character,10

#>

#> , , group2

#>

#> class1 class2

#> height numeric,10 numeric,10

#> weight numeric,10 numeric,10

#> sex character,10 character,10

(y2 <- cast_hier2dim(y, direction.names = 1))

#> , , group1

#>

#> class1 class2

#> smoker logical,10 logical,10

#>

#> , , group2

#>

#> class1 class2

#> smoker logical,10 logical,10

Now bind these lists together using bind_array(), which can handle recursive arrays (i.e. dimensional lists) natively with ease:

z <- bind_array(list(x2, y2), 1L)

print(z)

#> , , group1

#>

#> class1 class2

#> height numeric,10 numeric,10

#> weight numeric,10 numeric,10

#> sex character,10 character,10

#> smoker logical,10 logical,10

#>

#> , , group2

#>

#> class1 class2

#> height numeric,10 numeric,10

#> weight numeric,10 numeric,10

#> sex character,10 character,10

#> smoker logical,10 logical,10

What we’ve just done would require an expensive double for-loop in base R. But notice how easily we’ve achieved this goal with ‘broadcast’: just 2 steps - casting and then binding!

If you really want z to be a nested list, you can cast it back to hierarchical:

z2 <- cast_dim2hier(z)

Remember that elements of a list do not contain the actual data; they point to the data. Casting lists from dimensional to hierarchical and vice-versa therefore only makes inexpensive shallow copies, not deep copies. It’s also quite fast.
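For reference, the base R double for-loop that this section compares against can be sketched as follows. The lists are smaller versions of x and y, built with two hypothetical helpers (make_x_class() and make_y_class()) that exist only to keep the example short; the seed is likewise an assumption for reproducibility.

```r
set.seed(1)

# Hypothetical helpers that build one small "class" record for x and for y.
make_x_class <- function(n = 3) {
  list(
    height = rnorm(n, 170),
    weight = rnorm(n, 80),
    sex    = sample(c("M", "F", NA), n, TRUE)
  )
}
make_y_class <- function(n = 3) {
  list(smoker = sample(c(TRUE, FALSE), n, TRUE))
}

x <- list(
  group1 = list(class1 = make_x_class(), class2 = make_x_class()),
  group2 = list(class1 = make_x_class(), class2 = make_x_class())
)
y <- list(
  group1 = list(class1 = make_y_class(), class2 = make_y_class()),
  group2 = list(class1 = make_y_class(), class2 = make_y_class())
)

# Double for-loop: visit every group/class pair and append y's elements to x's.
z <- x
for (g in names(x)) {
  for (cl in names(x[[g]])) {
    z[[g]][[cl]] <- c(x[[g]][[cl]], y[[g]][[cl]])
  }
}

names(z$group1$class1)
```

The loop relies on x and y having identical group and class names; with deeper nesting it would need one more loop per level, which is where the dimensional representation becomes more convenient.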

7 Nested Arithmetic with Broadcasting

We have a class of students who scored certain amounts of points for homework exercises. The software used for collecting the points for these homework exercises produces an object that is read by R as a nested list, like so:

x <- list(

student1 = list(

homework1 = sample(0:100, 5),

homework2 = sample(0:100, 5),

homework3 = sample(0:100, 5)

),

student2 = list(

homework1 = sample(0:100, 5),

homework2 = sample(0:100, 5),

homework3 = sample(0:100, 5)

),

student3 = list(

homework1 = sample(0:100, 5),

homework2 = sample(0:100, 5),

homework3 = sample(0:100, 5)

)

)

Since all values are numbers, the teacher would like the list to be turned into a numeric array, to make analysis easier.

This can be done with the ‘broadcast’ package with the following steps.

First, turn the nested list into a shallow dimensional list:

x2 <- cast_hier2dim(x, in2out = FALSE, direction.names = 1L)

print(x2)

#> homework1 homework2 homework3

#> student1 integer,5 integer,5 integer,5

#> student2 integer,5 integer,5 integer,5

#> student3 integer,5 integer,5 integer,5

Second, turn the dimensional list into an atomic array using cast_shallow2atomic():

x3 <- cast_shallow2atomic(x2, 1L)

print(x3)

#> , , homework1

#>

#> student1 student2 student3

#> [1,] 61 32 55

#> [2,] 19 3 70

#> [3,] 28 52 90

#> [4,] 41 85 49

#> [5,] 59 88 72

#>

#> , , homework2

#>

#> student1 student2 student3

#> [1,] 64 23 19

#> [2,] 27 36 97

#> [3,] 77 14 92

#> [4,] 30 13 74

#> [5,] 11 80 1

#>

#> , , homework3

#>

#> student1 student2 student3

#> [1,] 92 81 2

#> [2,] 79 96 37

#> [3,] 43 95 14

#> [4,] 73 71 27

#> [5,] 25 52 54

Now that we have a numeric array, broadcasted numeric operations can be performed on it. In this case, the teacher would like to multiply the scores of the second homework assignment by 2, as it was deemed much harder than the other 2 homework assignments.

This can be done like so:

# creating multiplier array:

multiplier <- vector2array(c(1L, 2L, 1L), 3L, 3L, TRUE)

dimnames(multiplier)[[3L]] <- dimnames(x3)[[3L]]

print(multiplier)

#> , , homework1

#>

#> [,1]

#> [1,] 1

#>

#> , , homework2

#>

#> [,1]

#> [1,] 2

#>

#> , , homework3

#>

#> [,1]

#> [1,] 1

#>

#> broadcaster

# broadcasted multiply x3 with multiplier:

broadcaster(x3) <- TRUE

x3 * multiplier

#> , , 1

#>

#> student1 student2 student3

#> [1,] 61 32 55

#> [2,] 19 3 70

#> [3,] 28 52 90

#> [4,] 41 85 49

#> [5,] 59 88 72

#>

#> , , 2

#>

#> student1 student2 student3

#> [1,] 128 46 38

#> [2,] 54 72 194

#> [3,] 154 28 184

#> [4,] 60 26 148

#> [5,] 22 160 2

#>

#> , , 3

#>

#> student1 student2 student3

#> [1,] 92 81 2

#> [2,] 79 96 37

#> [3,] 43 95 14

#> [4,] 73 71 27

#> [5,] 25 52 54

#>

#> broadcaster

Thus we started off with a nested list, through which one cannot broadcast and for which little to no vectorized operators are available.

By turning the nested list into a dimensional atomic array, both broadcasted and vectorized operations can more easily be used.
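The same list-to-array conversion and weighting can be approximated in base R, which may help when checking results. The sketch below is an assumption-laden alternative, not the package's method: it uses nested sapply() calls with simplify = "array", then aperm() to arrange the dimensions as observation x student x homework, and finally sweep() for the weighting. The helper make_homeworks() is hypothetical and only shortens the data setup.

```r
set.seed(1)

# Hypothetical helper: one student's scores for 3 homework exercises.
make_homeworks <- function() {
  list(
    homework1 = sample(0:100, 5),
    homework2 = sample(0:100, 5),
    homework3 = sample(0:100, 5)
  )
}
x <- list(
  student1 = make_homeworks(),
  student2 = make_homeworks(),
  student3 = make_homeworks()
)

# Inner sapply(): 5 x 3 matrix per student (observation x homework).
# Outer sapply(): stacks these into a 5 x 3 x 3 array (.. x student).
per_student <- sapply(x, function(s) sapply(s, identity), simplify = "array")

# Reorder to observation x student x homework, as in the output above.
arr <- aperm(per_student, c(1, 3, 2))

# Weight the homework dimension (dimension 3): homework 2 counts double.
weighted <- sweep(arr, 3, c(1, 2, 1), "*")

dim(arr)
```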

8 Compute confidence/credible interval of a spline

The sd_lc() function computes the standard deviation for a linear combination of random variables. One of its notable use-cases is to compute the confidence interval (or credible interval if you’re going Bayesian) of a spline.

Packages like ‘mgcv’ allow the user to plot and analyse the smoothers, including their confidence intervals, from a fitted GAM.

But sometimes one may want to compute spline intervals manually, like in the following cases:

- Some packages (like the ‘INLA’ package), do not provide a clear and consistent way to compute the confidence intervals of a spline.

- One may want to use a type of spline not covered by the package you’re using (this is especially true when using a novel spline). In such cases, you’ll have to compute the spline mean and confidence/credible intervals on your own.

So one practical application of sd_lc() is computing confidence/credible intervals of splines when a package does not provide that service in a user-friendly way.

Please note that the sd_lc() function is just a linear algebra function, not specific to any type/class of model (so this function assumes you know what you’re doing).
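To make "standard deviation of a linear combination" concrete: for a row x_i of a design matrix X and a covariance matrix V of the coefficients, the standard deviation of x_i %*% b is sqrt(x_i V x_i'). The base R sketch below computes this for all rows in two ways, on made-up data. It illustrates the underlying identity only; how sd_lc() computes it internally is not shown on this page.

```r
set.seed(123)
n <- 6  # number of observations (rows of X)
p <- 3  # number of coefficients

X  <- matrix(rnorm(n * p), n, p)
A  <- matrix(rnorm(p * p), p, p)
vc <- crossprod(A)  # A'A: a symmetric positive semi-definite "covariance"

# Naive version: forms the full n x n matrix X V X' and keeps its diagonal.
sd_naive <- sqrt(diag(X %*% vc %*% t(X)))

# Row-wise version: only n x p intermediates are created.
sd_rowwise <- sqrt(rowSums((X %*% vc) * X))

all.equal(sd_naive, sd_rowwise)
```

The naive version needs memory proportional to n squared, which is what makes it impractical for large datasets; the row-wise version avoids that, at the cost of still creating one n x p temporary.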

For a demonstration of sd_lc(), the following will be done:

- A GAM model will be fitted using the ‘mgcv’ package. The model will consist of several low-rank thin-plate (lrtp) smoothers.

- One of these lrtp splines will be plotted with credible intervals using the plot() method provided by ‘mgcv’ itself.

- The sd_lc() function will be used to compute the credible intervals of the spline from step 2 and re-create the plot.

For the sake of this demonstration, I’ll forgo some crucial steps in statistical modelling - like data exploration, model diagnostics, and model interpretation.

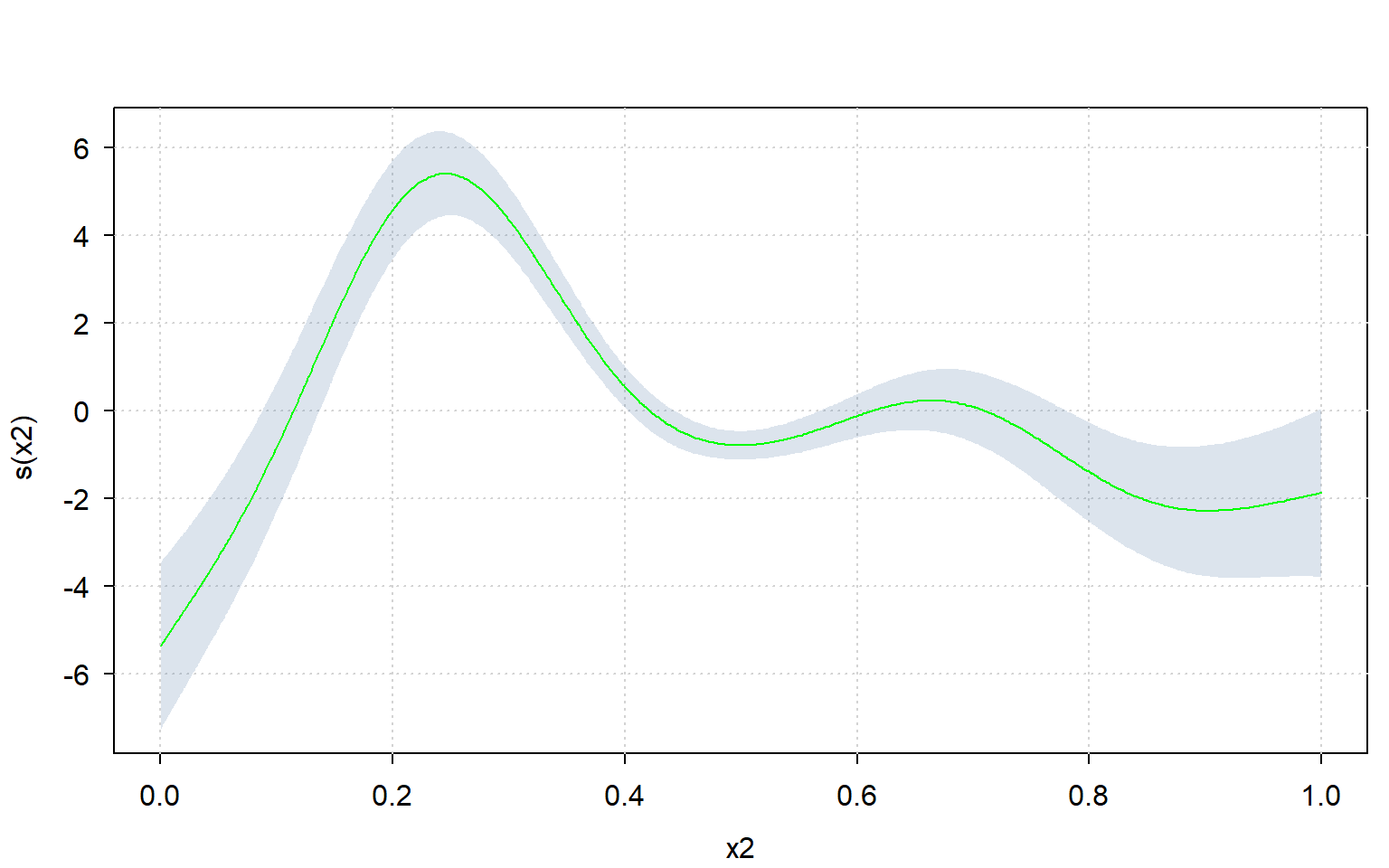

First, let’s create some data, fit a GAM on it, and plot the spline for variable “x2”:

d <- mgcv::gamSim(7, n = 1000L, dist = "normal", scale = 2L)

#> Gu & Wahba 4 term additive model, correlated predictors

m <- mgcv::gam(y ~ s(x1) + s(x2) + s(x3), data = d)

par(mfrow = c(1,1))

plot(m, select = 2)

Now extract the required model parameters to recreate this plot:

# get names of relevant coefficients:

coeffnames <- names(coef(m))

coeffnames <- grepv("x2", coeffnames)

print(coeffnames)

#> [1] "s(x2).1" "s(x2).2" "s(x2).3" "s(x2).4" "s(x2).5" "s(x2).6" "s(x2).7"

#> [8] "s(x2).8" "s(x2).9"

# get necessary model parameters (i.e. X, b, vc)

# but only for relevant coefficients:

b <- coef(m)[coeffnames]

X <- predict(m, type = "lpmatrix")[, coeffnames, drop = FALSE]

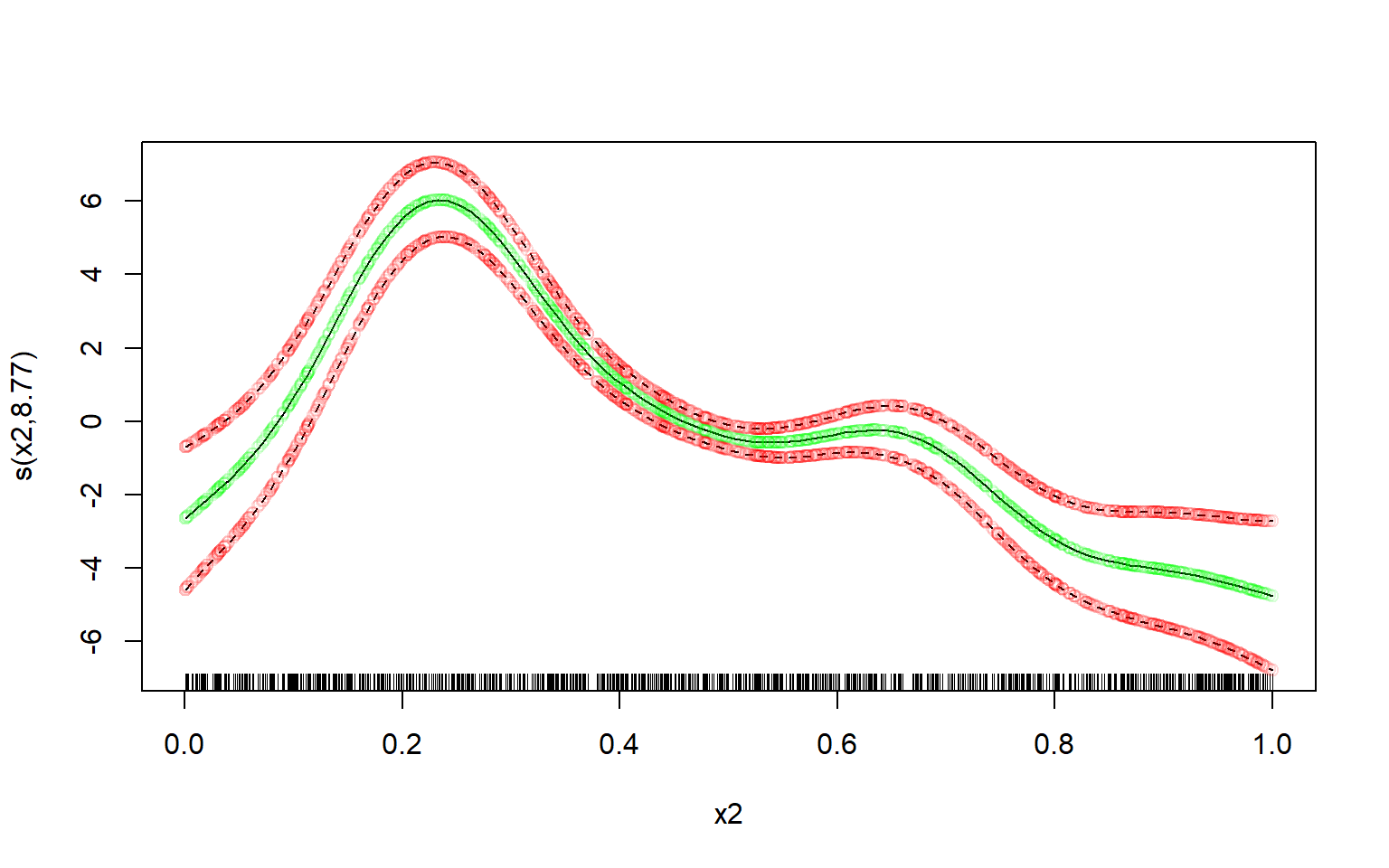

vc <- vcov(m)[coeffnames, coeffnames, drop = FALSE]Computing the means of the spline is trivial:

means <- X %*% bComputing the (~ 95%) credible interval is a bit trickier - at least if you want to avoid unnecessary copies/memory-usage when you have a large dataset, and don’t want to use a slow for-loop.

But using the sd_lc() function it becomes relatively easy to compute it very memory-efficiently, even with a very large dataset:

st.devs <- sd_lc(X, vc) # get standard deviations efficiently

mult <- 2 # mgcv uses multiplier of 2; approx. 95% credible interval

lower <- means - mult * st.devs

upper <- means + mult * st.devs

Now let’s plot the manually computed spline over the original one, and see how well our own estimate fits.

I’ll use transparent green dots for the mean, and transparent red dots for the credible interval:

# defines some colours:

green <- rgb(red = 0, green = 1, blue = 0, alpha = 0.15)

red <- rgb(red = 1, green = 0, blue = 0, alpha = 0.15)

# original plot from the 'mgcv' R-package:

par(mfrow = c(1,1))

plot(m, select = 2)

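# our own re-creation plotted over it

# (the %$% exposition pipe used below is assumed to come from the 'magrittr' package):

library(magrittr)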

lc <- data.frame(

x = d$x2,

means = means,

lower = lower,

upper = upper

)

lc <- lc[order(lc$x),]

lc %$% points(x = x, y = means, col = green) # plot our means

lc %$% points(x = x, y = lower, col = red) # plot our lower bound

lc %$% points(x = x, y = upper, col = red) # plot our upper bound

Perfect fit.

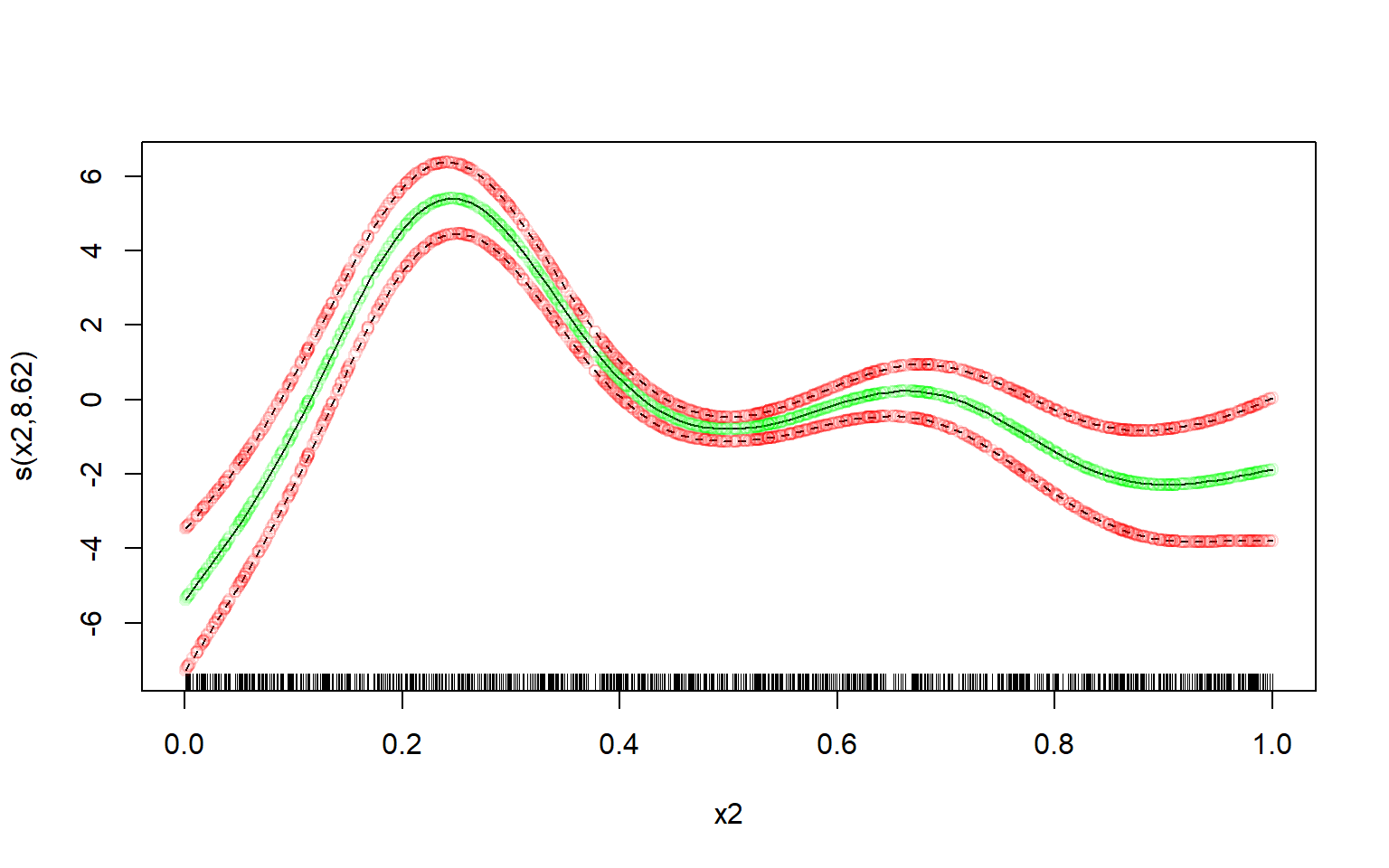

Since one can very easily re-create the plot using sd_lc(), one can also use a different plotting framework altogether.

Let’s use the ‘tinyplot’ framework to create the plot manually:

library(tinyplot)

tinytheme("clean")

lc %$% tinyplot( # plot confidence interval

x = x, ymin = lower, ymax = upper, type = "ribbon",

xlab = "x2", ylab = "s(x2)"

)

lc %$% tinyplot( # plot means over it

x = x, y = means, type = "l",

add = TRUE, col = "green"

)